What Is the Flynn Effect?

Is each generation really smarter than the previous one? Will our children be able to drive progress forward, or will they drown in consumerism and information noise? Is it truly possible to predict a person's success in adulthood based on intelligence test data from childhood?

These questions have been debated for years, largely thanks to the work of New Zealand philosopher and psychologist James Robert Flynn. In 1984, he published a study concluding that average intelligence quotient (IQ) scores had risen over time. Analyzing test data from Americans of the same age between 1932 and 1978, Flynn found that the average IQ score increased by about 3 points per decade. Three years later, he published a second study covering 14 countries, which confirmed the pattern: average IQ scores rose steadily over time. Studies by other scientists in various countries likewise found a steady, nearly linear rise in average IQ scores throughout the 20th century.

Although Flynn never named this pattern after himself, his work became so widely cited that the term “Flynn Effect” became firmly established in the scientific literature. Flynn himself preferred to credit psychology professor Read Tuddenham, who was the first to notice rising intelligence test scores in his work.

In this article, we will explain what IQ tests actually measure, discuss the main arguments for and against the existence of the Flynn Effect, and explore possible reasons for its rise and eventual decline.

The Problem of IQ Tests

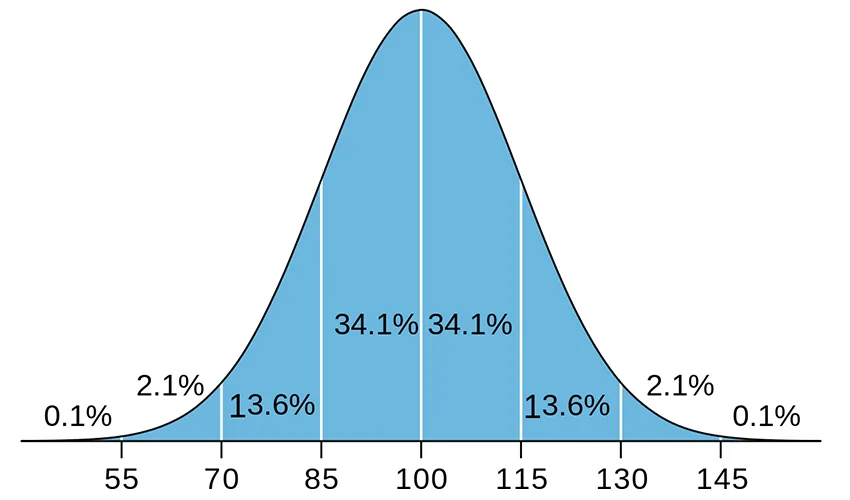

The Flynn Effect cannot be considered without addressing the fundamental issues with IQ tests. All intelligence tests are designed to produce a normal distribution of results, with an average score of 100 points. Over time, tests are recalibrated against new samples of results so that the average score remains 100.

For example, if you test a large enough group of people and get an average score of 105, you will be forced to adjust the test so that the average score becomes 100 again. In other words, someone who scored 105 yesterday would score only 100 today because the standards have been raised.

This also works the other way around: if we could test people from the early 20th century using modern norms, their average score would be around 70. Does this mean our ancestors were on the verge of intellectual disability? Not at all. The low figure is simply an artifact of scoring them against today's norms.

It was precisely this need to re-norm tests periodically, so that the average stays at 100, that allowed researchers to notice average scores rising over time.
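The re-norming described in this section can be illustrated with a short Python sketch. It assumes the common convention of a standard deviation of 15 IQ points, which this article does not state, and both the raw scores and the `to_iq` helper are hypothetical, chosen only for illustration.

```python
from statistics import mean, pstdev

def to_iq(raw_score, norm_sample, sd_points=15):
    """Convert a raw test score to an IQ relative to a norming sample.

    Assumed convention: the sample mean maps to 100, and one standard
    deviation of raw scores maps to `sd_points` IQ points (15 is an assumption).
    """
    mu = mean(norm_sample)
    sigma = pstdev(norm_sample)
    return 100 + sd_points * (raw_score - mu) / sigma

# Hypothetical raw scores (number of correct answers) for two norming samples.
old_norms = [20, 25, 30, 35, 40]  # earlier generation, mean 30
new_norms = [25, 30, 35, 40, 45]  # later generation, mean 35

raw = 35  # the same performance, scored against each set of norms
iq_old = to_iq(raw, old_norms)
iq_new = to_iq(raw, new_norms)
print(f"Against old norms: {iq_old:.1f}")  # Against old norms: 110.6
print(f"Against new norms: {iq_new:.1f}")  # Against new norms: 100.0
```

The same raw performance earns a lower IQ once the norms are updated. Comparing how a fixed performance is scored against older and newer norms is what makes a generational rise visible.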

The graph above shows the distribution of results from a standard IQ test.

Can IQ Tests Measure Intelligence?

But do IQ tests really measure intelligence, or do they simply measure test-taking ability? It’s clear that these are not the same thing. Even if we accept pattern recognition as a part of intelligence, a set of logical tasks cannot serve as a comprehensive measure of human intelligence. Studies show that retaking a standard IQ test (such as the Raven’s Progressive Matrices) on the same day can increase the score by several points. Has the person gotten smarter in just a few hours, or have they simply improved their ability to solve similar tasks?

Critics of “measuring” intelligence through tests offer several strong arguments. For instance, many point to the lack of correlation between rising IQ scores among children and success in school subjects. Test results in reading and math across various countries do not show the same increase in average results as IQ scores. In some countries, like the U.S., the average score has even dropped.

If each new generation is truly smarter, why hasn’t this been reflected in practical testing of school subjects? It can be confidently stated that IQ test results correlate with cognitive abilities, but they clearly do not serve as a universal measure of intelligence. Many researchers agree that IQ reflects only certain cognitive abilities rather than intelligence in its full sense. Therefore, too much practical importance should not be placed on such a score.

Despite these obvious shortcomings, IQ scores can still significantly impact the life of the person being tested. For example, in the U.S., a person can be legally declared intellectually disabled based on their IQ test result, which can affect their eligibility for benefits and the ability to manage their own property. A court may also determine that a person with a low IQ did not fully understand the consequences of their criminal actions and may reduce their sentence.

How Average IQ Has Changed Across Generations

Nevertheless, from a statistical point of view, Flynn’s conclusions hold up: throughout the 20th century, average IQ scores rose steadily.

Historically, intelligence tests were used most widely in Western European countries to screen army conscripts. This produced vast amounts of data that Flynn and other researchers could draw on.

For example, the average IQ among young men in the Netherlands increased by 20 points between 1952 and 1982. During the same period, the IQ score among Danish conscripts increased by 21 points. Similar results were obtained in industrially developed Asian countries such as Japan and South Korea. Data from Eastern and Southern Europe also confirmed a rise, although it was not as pronounced as in Northern and Western European countries. Other studies among children and women also showed a significant increase in the median score, although it differed from men’s results, where the data sample was always the largest. For example, the median score on the standard Raven’s Progressive Matrices test for British children increased by 14 points from 1938 to 2008. In African countries, studies had the smallest samples, but the Flynn Effect was confirmed in South Africa, Kenya, and other countries.

Upon closer examination of the results, it becomes clear that the increase in average IQ is primarily due to a reduction in the number of extremely low scores. In other words, over time, fewer people are failing the test and getting very low scores. Although the number of people achieving very high scores remains almost unchanged, the average score continues to rise.
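A toy calculation shows how the average can rise even when the top scores do not move. The scores below are hypothetical, and the 10-point improvement applied to the lowest scores is an assumption made purely for illustration.

```python
from statistics import mean

# Hypothetical IQ scores for an earlier cohort (illustrative values only).
earlier = [60, 70, 85, 100, 115, 130]

# Assumed change: only scores below 85 improve, by 10 points each;
# scores at the top of the range are left untouched.
later = [s + 10 if s < 85 else s for s in earlier]

print(f"Average before: {mean(earlier):.1f}")  # Average before: 93.3
print(f"Average after:  {mean(later):.1f}")    # Average after:  96.7
print(f"Top score unchanged: {max(earlier) == max(later)}")  # Top score unchanged: True
```

Only two of the six scores changed, yet the average moved by more than three points.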

The rise in average IQ in most industrialized countries was almost linear until the 1980s, with an increase of about 0.3 points per year, or about 3 points per decade. Starting in the 1980s, the rate slowed to 0.2 points per year, and by the late 1990s, the average IQ score began to decline. Many studies conducted in the 21st century confirm the continuous decline in average scores across nearly all age groups. This has led to discussions about the waning of the effect or the so-called “reverse Flynn Effect.”
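The rates quoted above can be turned into a rough cumulative estimate. The following Python sketch is only an approximation: the exact breakpoint years (1980 and 1995) are assumptions, since the text says only "the 1980s" and "the late 1990s", and `cumulative_gain` is a hypothetical helper. No rate is given for the later decline, so the sketch does not model it.

```python
def cumulative_gain(start_year, end_year, slowdown_year=1980, plateau_year=1995):
    """Approximate IQ gain between two years using the rates quoted in the text.

    0.3 points/year before `slowdown_year`, 0.2 points/year until `plateau_year`.
    Both breakpoints are assumptions. Years from `plateau_year` onward add
    nothing, because the text gives no rate for the decline.
    """
    gain = 0.0
    for year in range(start_year, end_year):
        if year < slowdown_year:
            gain += 0.3
        elif year < plateau_year:
            gain += 0.2
    return gain

print(f"{cumulative_gain(1932, 1978):.1f}")  # 13.8
print(f"{cumulative_gain(1950, 2000):.1f}")  # 12.0
```

For the 1932 to 1978 period Flynn studied, 46 years at 0.3 points per year gives roughly 14 points, consistent with a rate of about 3 points per decade.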

What Caused the Flynn Effect?

Why did the average IQ increase continuously throughout most of the 20th century? This period saw fundamental changes in the structure of society. Scientific discoveries and technological progress rapidly transformed daily life, requiring people to adapt and develop abstract thinking, a skill that had rarely been in demand for most of human evolutionary history. Vaccines for infectious diseases were developed, access to information increased, and people began to eat better and receive better education. It is likely that the Flynn Effect was caused not by a single factor but by a combination of these elements. Several major theories attempt to explain the rise in average IQ. Below, we examine the arguments for and against each one.

Genetic Theory

This theory rests on heterosis, or hybrid vigor: the idea that the offspring of parents from genetically distant populations tend to outperform earlier generations. Revolutions, the creation of new states, local wars, and two world wars led to significant population movement throughout the 20th century. Proponents of the genetic theory believe that innate intelligence exists and point to the increase in mixed marriages as the primary reason for its rise among offspring. In support of a genetic component, twin studies in which twins were separated at a young age and raised in different environments show that the twins' IQs in adulthood tend to be very similar, despite differences in education and upbringing.

Counterargument: Studies conducted in regions with minimal migration also demonstrated significant increases in average IQ throughout the 20th century.

Education and the Practice Effect

It is an undeniable fact that education became more accessible to wider social groups throughout the 20th century. Literacy rates increased, and the average length of time spent in education grew. The need to absorb larger volumes of information and grasp more complex concepts contributed to the development of abstract thinking. As the length of time spent in education increased, so did the frequency of interaction with logical problems and with the very concept of testing as a tool for knowledge assessment. Supporting this theory, children who attend school consistently perform better on IQ tests than those who do not.

Research shows that test-taking frequency positively correlates with test results. Therefore, it is unsurprising that each new generation becomes more prepared for IQ testing. Many tasks in IQ tests follow the same problem-solving principles, so once you learn how to solve one, you can apply that knowledge to others. Thus, it is possible to simply learn how to solve IQ tests. This is called the practice effect, but such an explanation brings us back to the question of the validity of IQ tests as a tool for measuring intelligence.

Counterargument: Despite overall improvements in the education system, the Flynn Effect is observed unevenly, even across different regions of the same country (e.g., in the U.S.), casting doubt on the universality of this factor.

Medicine

Throughout its history, humanity has suffered from epidemics of deadly diseases. However, it was in the 20th century that vaccines for smallpox, polio, measles, mumps, whooping cough, diphtheria, and tetanus were developed. Mass vaccination saved millions of lives. The discovery of antibiotics and antimalarial drugs revolutionized medical practice, improving both the quality and longevity of human life worldwide.

Most researchers agree that the reduction in infectious disease allowed children to develop normally, without facing the dangerous consequences and complications of illnesses. It is highly likely that reducing the impact of infectious diseases contributed to improved cognitive abilities.

Counterarguments: Critics point to African and South Asian countries, where infectious diseases remained a significant problem throughout the 20th century, especially among children. Yet, these countries also saw an increase in average IQ.

According to this theory, the rise in IQ should halt in countries where infectious diseases have been brought under control. However, in countries like Sweden and the Netherlands, infectious diseases were already minimal by the 1950s, yet the average IQ continued to rise for several more decades.

Nutrition

Supporters of the nutrition theory point to data showing improvements in the quality of nutrition throughout most of the 20th century. Reducing deficiencies in essential micronutrients, particularly in childhood, may have led to significant improvements in cognitive functions. Research indicates that the simple spread of iodized salt has a clear positive correlation with the rise in average IQ.

Supporting this theory is the fact that the average height of people today is about 10 centimeters taller than it was 100 years ago. This increase is due to improved access to a more diverse diet. Along with the increase in height, brain size has also grown, which could positively affect cognitive functions. Another argument for this theory comes from studies in remote African villages, where an increase in average IQ was also observed. In these areas, other factors like education, access to information, or medicine were unlikely to have had a significant influence, whereas nutrition did become more varied.

Counterargument: Nutrition in Asian countries did not change as significantly over the 20th century as it did in Europe or America. The average height and brain size of Asians also did not increase substantially. Nevertheless, the Flynn Effect was observed in almost all Asian countries. Additionally, there are countries and regions that experienced several years of famine, where diets were extremely poor and monotonous. Yet, scientists did not find significant deviations in IQ among individuals whose childhood or time in the womb coincided with these periods.

Social and Cultural Changes

The structure of society underwent significant changes during the 20th century. Urbanization increased, with more people concentrated in large metropolitan areas. Meanwhile, families began to have fewer children, and the number of children per family decreased from generation to generation. Having fewer children, combined with rising economic prosperity, allowed more attention to be given to their upbringing and development. Work processes modernized, requiring less physical strength and more cognitive effort. Society became more competitive, increasing the need for professional qualifications to achieve economic success. With the spread of radio and television, the process of receiving information changed, along with how it was analyzed. The brain was forced to learn how to quickly switch between different tasks and use abstract thinking to generalize and structure the constant influx of new data.

Counterarguments: This hypothesis applies primarily to industrialized economies. In rural areas of India and Africa, where families remained large and societal changes in work processes and information consumption were minimal, an increase in average IQ was also observed. Another argument against this hypothesis is the fact that the average IQ also rose among groups where work does not require a high level of cognitive involvement.

The Reverse Flynn Effect: Why IQ Is No Longer Increasing

While the Flynn Effect suggests that each generation scores higher than the previous one, the Reverse Flynn Effect points to the opposite trend: each successive generation scores lower. For example, a large study from Northwestern University found that between 2006 and 2018, the IQ of American adults consistently declined, regardless of their level of education, gender, or age. Research from other countries also indicates a drop in average IQ over the past 20 to 40 years. Even Flynn himself noted a decrease of around two points in the IQ of British 14-year-olds between 1980 and 2008.

So why has the average IQ stopped rising in the 21st century? While no definitive answers exist, researchers have proposed several key theories to explain the Reverse Flynn Effect:

Genetic and Demographic Factors

Some scientists believe that the increasing age of motherhood negatively impacts the intellectual abilities of children. Moreover, some studies have found a positive correlation between a woman's IQ and her decision not to have children. Thus, a portion of women with higher IQs may not pass on their genes. At the same time, individuals with lower IQs tend to have more children. According to proponents of the genetic theory, this could contribute to the decline in the average intelligence of each successive generation.

Nutrition

Over the past 30 years, there has been a dramatic increase in the consumption of refined sugar, palm oil, artificial colorings, preservatives, formaldehydes, trans fats, and synthetic food additives. These substances affect molecular systems and cellular processes.

Research suggests that a higher intake of these substances weakens cognitive function and contributes to rising rates of depression. For instance, about 95% of the body's serotonin, a key neurotransmitter involved in appetite, sleep, and mood, is produced in the gut, so its production is closely tied to diet. As such, the quality of food directly affects the brain and all bodily processes.

An interesting study compared the average diet in "developed" countries with that of Japan and southern European countries. It found that those who follow a "Western" diet have a 30% higher chance of developing depression. Naturally, it's hard to think clearly when in a depressed state, which reduces concentration and ultimately leads to a decline in average IQ scores.

Air Pollution

Some researchers attribute the Reverse Flynn Effect to environmental pollution, particularly air pollution. It has long been known that poor air quality leads to increased lung diseases, but research has also linked air pollution directly to impaired cognitive function and to the development of dementia.

Changes in Society

One theory suggests that changing lifestyles are to blame for declining IQ scores. For instance, the average person today spends much less time reading compared to 30 years ago, while devoting more time to social media, which does little to enhance abstract thinking or cognitive abilities. Within this framework, it is also argued that there has been a general degradation of school and higher education standards, with a shift toward narrow specialization.

Cultural trends in certain age groups may also play a role, such as the notion that academic achievement or career success is no longer "cool" among some youth. This creates a less stimulating environment, even for young people who are otherwise eager to learn.

The Impact of Flynn's Research on Society and Education

What practical significance do the studies of James Flynn and other researchers in cognitive development hold? In fact, they have had a profound impact on many areas of life.

First, the approach to evaluating intelligence has shifted. IQ tests are no longer considered the gold standard, and their influence on an individual’s future has significantly decreased. For example, children can no longer be placed in special education solely based on IQ test results. Courts are also less likely to accept IQ test scores as proof of intellectual disability, and employers rarely use them to assess candidates’ abilities. This has led to the development of more comprehensive methods for assessing personality and intelligence, and a growing interest in practical skill testing within specific areas of knowledge.

Second, the scientific community has re-evaluated the view of intelligence as a genetically predetermined trait. Theories that distinguish several types of intelligence, such as Raymond Cattell’s distinction between fluid and crystallized intelligence, together with the idea that these abilities can be developed, have gained popularity.

The understanding that cognitive abilities can improve under various conditions has undermined the belief in the intellectual superiority of one race over another. This shift in perspective has significantly impacted policies aimed at combating racial inequality.

Third, discussions of social justice and discrimination have intensified. Society has become more aware of the role that social and economic factors play in cognitive development. This has led to increased support for disadvantaged groups at both government and private initiative levels. Widespread programs that support low-income families have improved access to healthcare and educational opportunities for these groups.

Finally, Flynn’s research has driven changes in educational programs, particularly in early childhood development, where there is now greater emphasis on nurturing both social and cognitive skills. Additionally, the realization that intelligence can develop at any age has fueled the rapid growth of adult education programs and courses.

Conclusion

Will our grandchildren be smarter than us thanks to the Flynn Effect, or less intelligent due to the Reverse Flynn Effect? The answer to this question remains uncertain. What is clear is that each generation adapts to the challenges it faces. Our ancestors were certainly not less intelligent than we are, considering the environments and problems they had to deal with. The conditions in which future generations live will also depend, in part, on us.

You've reached the end of this article in your pursuit of knowledge, which already suggests a commendable level of intelligence. Share this article with friends, promote science and self-education. Now you know to approach IQ test results with a healthy dose of skepticism and are ready to take on the challenge. We recommend trying the following:

Standard Raven's Progressive Matrices
